Joël R. Langlois

Seamless cross-platform apps, prioritising performance and UX.

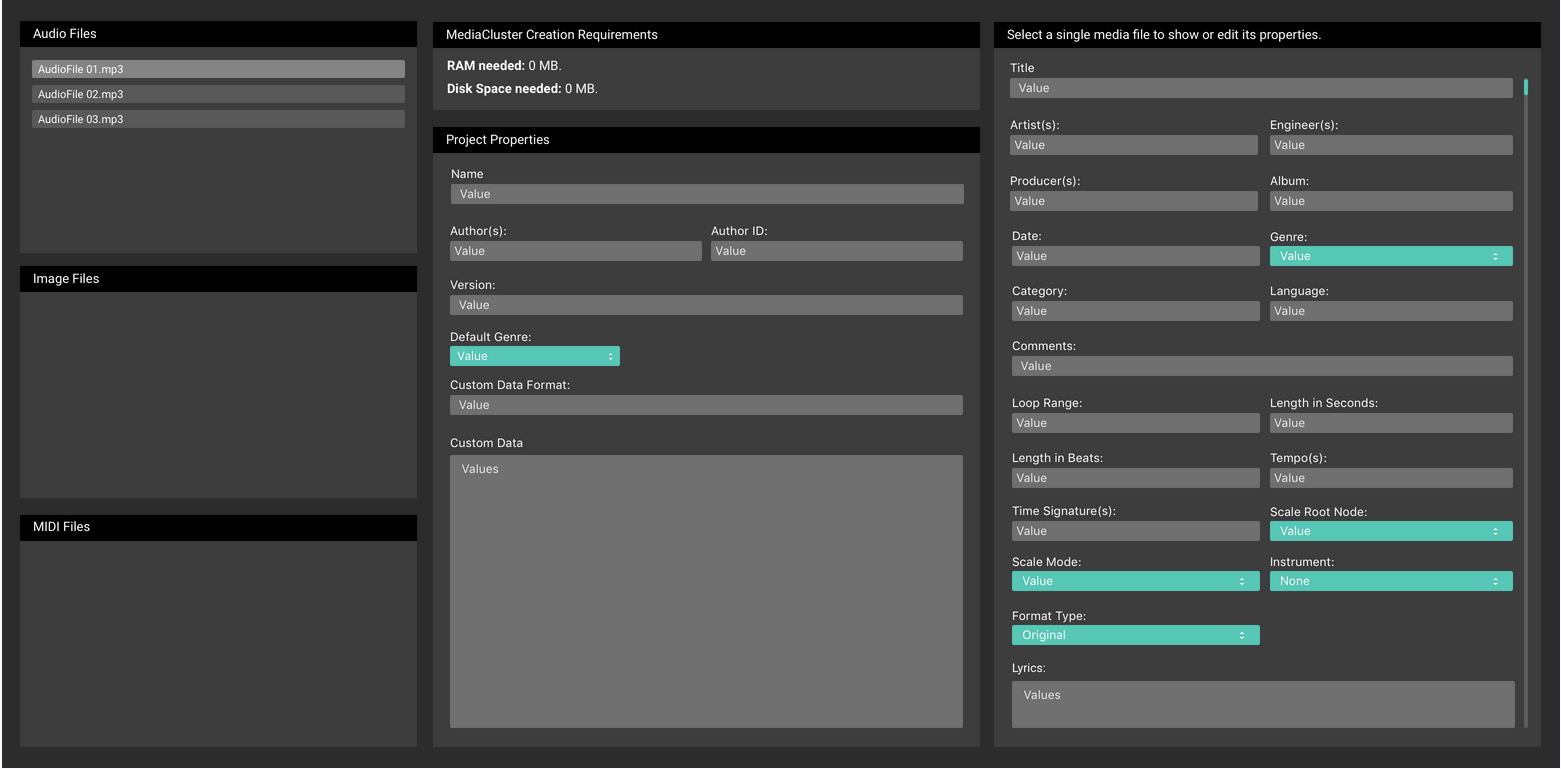

MediaCluster

A custom media container format.

Introduction

This project is a closed-source digital audio container format. It is intended to act as a big-data package: built for ease of sharing audio content rather than for streaming.

Our goal with MediaCluster is to make the task of storing, sharing, and using studio stems as simple as possible across any platform (eg: desktop, mobile), all the while having minimal latency for high-performance real-time audio applications.

High performance here means not being restricted by internet bandwidth, which is why we decided against streaming data: any delay in an audio pipeline will inevitably cause glitches, which are undesirable in professional audio environments. Even if streaming were supported, we would be reliant on internet connectivity: without it, player and studio applications would not be able to use a MediaCluster.

Existing media containers are encumbered by patent, legal or proprietary restrictions, are abandoned (eg: DVR-MS), are mainly video focused (eg: 3GP, Ogg), are locked to specific platforms (eg: ASF on Windows), or restrict the audio codecs that can be contained. For simplicity’s sake, MediaCluster is designed to allow containing all of the major audio codecs used by audio engineers, studio musicians and audiophiles (eg: WAV, AIFF, FLAC, Ogg Vorbis, MP3, WavPack), while forgoing video altogether.

MediaCluster makes sharing even easier by avoiding the standard approach to sharing sound packages: separate database files alongside folders of the audio files they reference. Instead, the database table is serialised side-by-side with the list of contained audio files, which makes reading any data more efficient and streamlined. The serialisation approach comes with the side-effect of extensibility - we now have the flexibility to satisfy any metadata desired by mastering engineers, including album images and covers, studio and artist identification for an entire MediaCluster, and even serial numbers.
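To illustrate the idea of keeping the table and the audio in one file, here is a minimal sketch. The actual MediaCluster layout is closed-source and not described here, so everything below is a hypothetical stand-in: the `MediaItem` and `TableEntry` types, the `packCluster` and `readTable` functions, and the byte layout itself (an entry count, then a name, offset and size per entry, then the raw payload) are assumptions made purely for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical types for illustration; not the real MediaCluster layout.
struct MediaItem {
    std::string name;               // eg: "drums.flac"
    std::vector<std::uint8_t> data; // encoded audio bytes, stored untouched
};

struct TableEntry {
    std::string name;
    std::uint64_t offset = 0; // start of the item, relative to the payload region
    std::uint64_t size = 0;   // item length in bytes
};

// Append an unsigned integer in little-endian byte order.
template <typename T>
void writeLE(std::vector<std::uint8_t>& out, T value) {
    for (std::size_t i = 0; i < sizeof(T); ++i)
        out.push_back(static_cast<std::uint8_t>((value >> (8 * i)) & 0xFF));
}

// Read an unsigned little-endian integer, advancing pos (no bounds checks in this sketch).
template <typename T>
T readLE(const std::vector<std::uint8_t>& in, std::size_t& pos) {
    T value = 0;
    for (std::size_t i = 0; i < sizeof(T); ++i)
        value |= static_cast<T>(in[pos++]) << (8 * i);
    return value;
}

// Assumed layout: [count u32], then per entry [name length u32][name][offset u64][size u64],
// followed by every item's bytes back to back.
std::vector<std::uint8_t> packCluster(const std::vector<MediaItem>& items) {
    std::vector<std::uint8_t> out;
    writeLE<std::uint32_t>(out, static_cast<std::uint32_t>(items.size()));
    std::uint64_t offset = 0;
    for (const auto& item : items) {
        writeLE<std::uint32_t>(out, static_cast<std::uint32_t>(item.name.size()));
        out.insert(out.end(), item.name.begin(), item.name.end());
        writeLE<std::uint64_t>(out, offset);
        writeLE<std::uint64_t>(out, item.data.size());
        offset += item.data.size();
    }
    for (const auto& item : items)
        out.insert(out.end(), item.data.begin(), item.data.end());
    return out;
}

// Read only the table; payloadStart receives the index of the first payload byte.
std::vector<TableEntry> readTable(const std::vector<std::uint8_t>& file, std::size_t& payloadStart) {
    std::size_t pos = 0;
    const auto count = readLE<std::uint32_t>(file, pos);
    std::vector<TableEntry> table(count);
    for (auto& entry : table) {
        const auto nameLength = readLE<std::uint32_t>(file, pos);
        entry.name.assign(file.begin() + pos, file.begin() + pos + nameLength);
        pos += nameLength;
        entry.offset = readLE<std::uint64_t>(file, pos);
        entry.size = readLE<std::uint64_t>(file, pos);
    }
    payloadStart = pos;
    return table;
}
```

With a layout along these lines, a reader can parse the table once and then jump straight to `payloadStart + entry.offset` for any item, without touching a separate database file or folder structure.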

We want to make sharing and utilising content an easier process for both content creators and users.

Utilising can mean either playback, or editing and remixing in an audio/music/video production environment.

Research

The problem is that we can’t just stream audio from the internet: this can cause delays and glitches in the audio pipeline due to slow network connections, and also ties any workstation project to an internet connection being available.

Instead, we are seeking a media container system to simplify this sharing process.

There are many existing media containers, but none of them seem to allow using all of the mainstream audio codecs.

We initially thought of using QuickTime, but ran into multiple issues. First, this format is not supported on all platforms, such as Android. It has also been deprecated by Apple due to security flaws, and Apple has since dropped QuickTime in favour of a closed-source system restricted to Apple platforms, titled AV Foundation.

Apple Ends Support for QuickTime for Windows; New Vulnerabilities Announced

Apple confirms QuickTime for Windows is dead, Adobe stuck between rock and hard place

On the other hand, containers like 3GP are heavily video focused and restrict the supported audio codecs to that of an officially registered list. As demonstrated in the table of Annex A.3 Usage of 3GPP branding, the codecs supported by this container are limited to highly specialised audio codecs for speech (eg: AMR, EVRC, SMV, VMR) and AAC.

Another alternative container, DVR-MS, has been designed and recently deprecated by Microsoft. This type is entirely proprietary and confined to Windows desktop systems, and is apparently slanted towards containing video, but little information about its specifications can be found.

It appears that Microsoft designed a successor to the DVR-MS format titled WTV. Based on the Microsoft article Working with WTV Files, the format seems locked down to Microsoft systems and limited to the MP2 and AC3 audio codecs. But like the previous container, little official documentation about its specification can be found.

In the end, it seems there is no audio container nor toolset to allow professional and hobbyist audio content creators to use audio codecs they already know, package them up, and share it all as a bundle on any platform.

In the context of native audio playback, operating system supported codecs are extremely fragmented. Each OS supports its own range of formats, some less common than others.

Apple lists the following audio codecs as supported on their systems:

AAC

MP3

WAV

AIFF/AIFFC

NeXT/Sun Audio

AC3

CAF

SD2

Microsoft lists the following audio codecs as supported on their systems:

AAC

MP3

WAV

AIFF/AIFFC

NeXT/Sun Audio

MP2

WMA

Android lists the following audio codecs as supported on their systems:

AAC

MP3

WAV

AMR

FLAC

Opus

OGG

First, we have had to narrow down the codecs to shorten delivery time.

It should be noted that supporting a new codec is no easy feat. With that in mind, we have dropped support for platform-specific audio formats in order to keep our applications consistent on all current (ie: desktop) and potential (ie: mobile) platforms.

To start, we needed to narrow down which formats JUCE, a C++ audio app/plugin framework, supports for encoding and decoding. The result is a sharply narrowed list:

Ogg Vorbis

FLAC

AIFF

WAV

MP3

In addition, we have wrapped support for WavPack. It is certainly not a popular format, but it is free and open-source. The creators of this format provide a free C SDK which we were able to easily use to extend our list of supported codecs.

It looks like no existing container supports all of these mainstream audio formats.

And so the next best thing is to write our own container.

With this newfound flexibility, we decided to conceptualise something more artist-oriented by supporting not only the codecs that we have available, but also images, a large variety of preset metadata, and even the potential for specifying a custom subformat and its file structure.

The latter can be entirely situation dependent, such as easily mapping a SoundFont in a MediaCluster, or even supporting an audio sampler MIDI keyboard layout.

With that, we have the advantage of using JUCE’s String system: metadata can be a mixture of any null-terminated ASCII, UTF8, UTF16 and UTF32.

Unsolved Challenge: Speeding Up Table Indexing

Building the table that maps each contained media file to its byte range is inherently slow and tedious, depending on how many files are going to be contained in a MediaCluster.

You have to use a brute force method of iterating through every piece of media and caching their size. Then, you have to plan out how each piece of media is going to be ordered, caching each starting point and end point.
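The two passes described above can be sketched as follows. This is only an illustration under stated assumptions: `MeasuredFile`, `ByteRange` and `planLayout` are hypothetical names, the sizes are assumed to have been cached already by the first pass, and the ordering rule (group by media type, then by name) is one arbitrary choice rather than the ordering MediaCluster actually uses.

```cpp
#include <algorithm>
#include <cstdint>
#include <string>
#include <vector>

// Result of the first pass: one record per piece of media with its cached size.
struct MeasuredFile {
    std::string name;
    std::string type;   // eg: "flac", "wav", "png"
    std::uint64_t size; // in bytes
};

// Result of the second pass: where each piece of media will live.
struct ByteRange {
    std::string name;
    std::uint64_t start; // first byte, inclusive
    std::uint64_t end;   // one past the last byte, exclusive
};

std::vector<ByteRange> planLayout(std::vector<MeasuredFile> files) {
    // Assumed ordering rule: group by media type, then sort by name within a type.
    std::stable_sort(files.begin(), files.end(),
                     [](const MeasuredFile& a, const MeasuredFile& b) {
                         if (a.type != b.type) return a.type < b.type;
                         return a.name < b.name;
                     });

    // Walk the ordered list once, caching each start and end point.
    std::vector<ByteRange> ranges;
    ranges.reserve(files.size());
    std::uint64_t cursor = 0;
    for (const auto& file : files) {
        ranges.push_back({file.name, cursor, cursor + file.size});
        cursor += file.size;
    }
    return ranges;
}
```

The planning pass itself is cheap (a sort plus one linear walk); in a sketch like this, the expensive part is the first pass that has to touch every file to learn its size.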

With hundreds of files or more on a subpar computer, this can take upwards of a minute to generate - not ideal for impatient users, which is typically all of them!

I plan on multithreading this using a thread pool, and dividing the work into jobs per media type found. This should speed up the process by spreading the work across CPU cores.
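As a rough sketch of that plan, the snippet below runs one job per media type and merges the results. It is not the planned implementation: `std::async` stands in for a real thread pool, `measureByType`, `SizeFn` and `SizeMap` are hypothetical names, and the `measure` callback (assumed to be safe to call from several threads at once) stands in for whatever actually reads a file's size.

```cpp
#include <cstdint>
#include <functional>
#include <future>
#include <map>
#include <string>
#include <vector>

using SizeFn = std::function<std::uint64_t(const std::string&)>;
using SizeMap = std::map<std::string, std::uint64_t>;

// filesByType maps a media type (eg: "flac") to the paths of that type.
SizeMap measureByType(const std::map<std::string, std::vector<std::string>>& filesByType,
                      const SizeFn& measure) {
    // Launch one asynchronous job per media type found.
    std::vector<std::future<SizeMap>> jobs;
    for (const auto& group : filesByType) {
        const auto& paths = group.second;
        jobs.push_back(std::async(std::launch::async, [&paths, &measure] {
            SizeMap partial;
            for (const auto& path : paths)
                partial[path] = measure(path);
            return partial;
        }));
    }

    // Wait for every job and merge the partial results on the calling thread.
    SizeMap all;
    for (auto& job : jobs) {
        SizeMap partial = job.get();
        all.insert(partial.begin(), partial.end());
    }
    return all;
}
```

One caveat with splitting per media type: if most of the files share a single type, one job ends up doing most of the work. Splitting into evenly sized chunks of files would be an alternative worth measuring.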

Unsolved Challenge: Reusing Existing Metadata Tags from Codecs

Seeing that it's painstaking to manually register metadata from audio files into a MediaCluster, automating reading the tags prepackaged with each audio file (if any are written) would spare a lot of effort on the user's part.

The downside is that there are many possible metadata formats. There are generic or standardised forms available such as ID3, APE and Vorbis comments, each with its own many subversions. Even more difficult is parsing the non-standard, per-codec formats, as most of them aren't documented: we have to find and log every single tag by manually checking existing audio files.
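For the standardised formats, part of the work is mapping each format's own tag names onto one common field. The sketch below shows the idea for a handful of well-known names only (ID3v2 frame IDs such as `TIT2`, and the conventional Vorbis comment and APEv2 keys). The `TagFormat` enum, the `normaliseTag` function and the common field names are hypothetical, and matching keys case-insensitively is a simplifying assumption.

```cpp
#include <algorithm>
#include <cctype>
#include <map>
#include <string>

enum class TagFormat { ID3v2, VorbisComment, APEv2 };

// Returns a common field name for a format-specific key,
// or an empty string when the key is not in this (deliberately tiny) table.
std::string normaliseTag(TagFormat format, std::string key) {
    // Compare keys in upper case so "Artist" and "ARTIST" are treated alike.
    std::transform(key.begin(), key.end(), key.begin(),
                   [](unsigned char c) { return static_cast<char>(std::toupper(c)); });

    static const std::map<std::string, std::string> id3 = {
        {"TIT2", "title"}, {"TPE1", "artist"}, {"TALB", "album"}, {"TRCK", "track"}};
    static const std::map<std::string, std::string> vorbis = {
        {"TITLE", "title"}, {"ARTIST", "artist"}, {"ALBUM", "album"}, {"TRACKNUMBER", "track"}};
    static const std::map<std::string, std::string> ape = {
        {"TITLE", "title"}, {"ARTIST", "artist"}, {"ALBUM", "album"}, {"TRACK", "track"}};

    const auto& table = format == TagFormat::ID3v2           ? id3
                        : format == TagFormat::VorbisComment ? vorbis
                                                             : ape;
    const auto it = table.find(key);
    return it == table.end() ? std::string() : it->second;
}
```

A real importer would need far larger tables, plus the undocumented per-codec tags mentioned above, which is where most of the effort goes.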

Even if we managed to support checking for every type for every codec format, it would lengthen the time needed to import all of the audio files - making it an even slower process.

If we count the list of possible tags from each metadata format, totalling around 300, that's how many we have to check for every single audio file you want to import. If we theorise this process takes about 3 seconds per audio file on a mid-end computer, and a user imports 100 audio files, we're looking at 300 seconds of processing time for import - or 5 minutes dedicated to parsing and containing audio files in a MediaCluster.

Not all codecs are the same: some don't support APE, and some don't support Vorbis, making the 5 minutes shorter. Regardless, it's added time and effort in processing.

Like the previously noted challenge, importing audio files and/or parsing the tags can likely be turned into a multithreaded process.
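To put numbers on this, the estimate above can be written out, together with an idealised figure for a multithreaded import. The function names are hypothetical, and the parallel figure assumes the files split evenly across workers with no overhead or disk contention, so it is a best case rather than a prediction.

```cpp
#include <cstdint>

// Serial estimate: every file is parsed one after another.
constexpr std::uint64_t serialSeconds(std::uint64_t files, std::uint64_t secondsPerFile) {
    return files * secondsPerFile;
}

// Idealised parallel estimate: the busiest worker handles ceil(files / workers) files.
// Requires workers >= 1.
constexpr std::uint64_t parallelSeconds(std::uint64_t files, std::uint64_t secondsPerFile,
                                        std::uint64_t workers) {
    return ((files + workers - 1) / workers) * secondsPerFile;
}

// 100 files at a theorised 3 seconds each: 300 seconds, or 5 minutes.
static_assert(serialSeconds(100, 3) == 300, "serial import estimate");

// The same import on 4 workers (an assumed count): 25 files each, 75 seconds at best.
static_assert(parallelSeconds(100, 3, 4) == 75, "idealised parallel import estimate");
```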